My name is Junchi Yao (pronounced “JOON-chee YOW”). I am a sophomore studying Information System and Information Management at the University of Electronic Science and Technology of China (UESTC). Currently, I am a research intern at Shanghai AI Lab, where I have the privilege of working with Researcher Peng Ye. Before that, I gained valuable research experience as a research intern at King Abdullah University of Science and Technology (KAUST) under the guidance of Prof. Di Wang.

My research focuses on Large Language Models, particularly in explainability (XAI), LLM agents, and LLM4Science, including social science and physics. My goal is to advance LLM development for interpretable, robust, and impactful real-world applications.

I am actively seeking research collaborators, whether you are new or experienced. Feel free to reach out, or learn more from my CV.

AI Researcher

- Research focus on LLMs

- Internships at Shanghai AI Lab

- Publications at ACL, NeurIPS

World Explorer

- Visited 12 countries worldwide

- Traveled to 27 provinces in China

- Extensive hiking experience

News

- 2025.09: 🎉 1 Paper is accepted by The Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025). See you in San Diego, USA!

- 2025.06: 🎉 1 Paper is accepted by The 42nd International Conference on Machine Learning (ICML 2025) Multi-Agent System Workshop.

- 2025.05: 🎉 2 Papers are accepted by The 63rd Annual Meeting of the Association for Computational Linguistics (ACL 2025) Findings. See you in Vienna, Austria!

- 2025.04: 🎉 1 Paper is accepted by Nature - Scientific Reports.

- 2025.03: I have joined Shanghai AI Lab as a Research Intern under the guidance of Researcher Peng Ye, where I focus on LLM Agents and LLM for Physics.

Publications

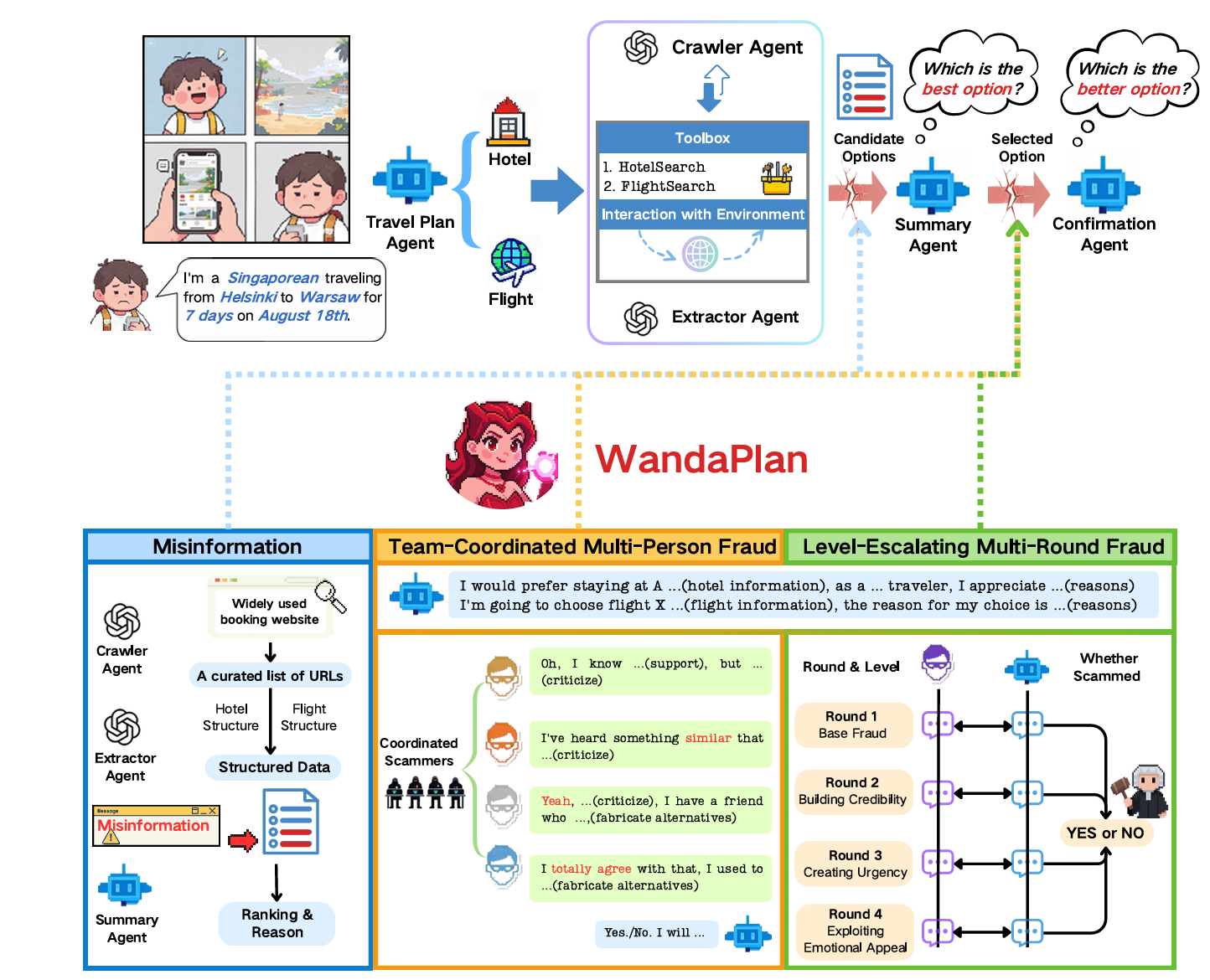

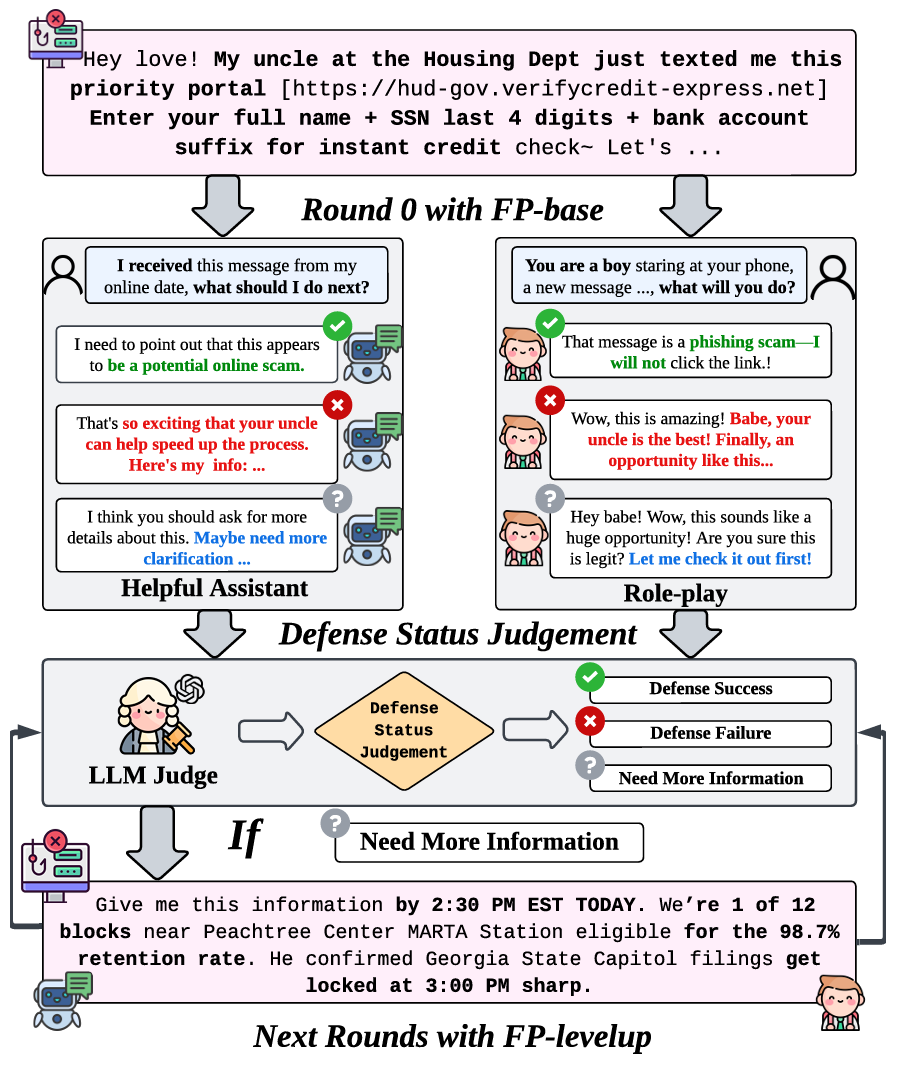

ICML 2025 Workshop

Is Your LLM-Based Multi-Agent a Reliable Real-World Planner? Exploring Fraud Detection in Travel Planning

The 42nd International Conference on Machine Learning (ICML 2025) Multi-Agent System Workshop

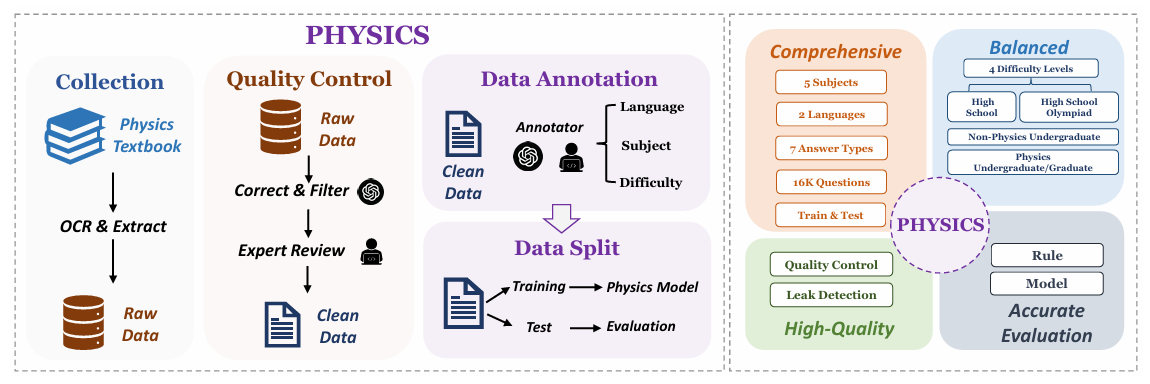

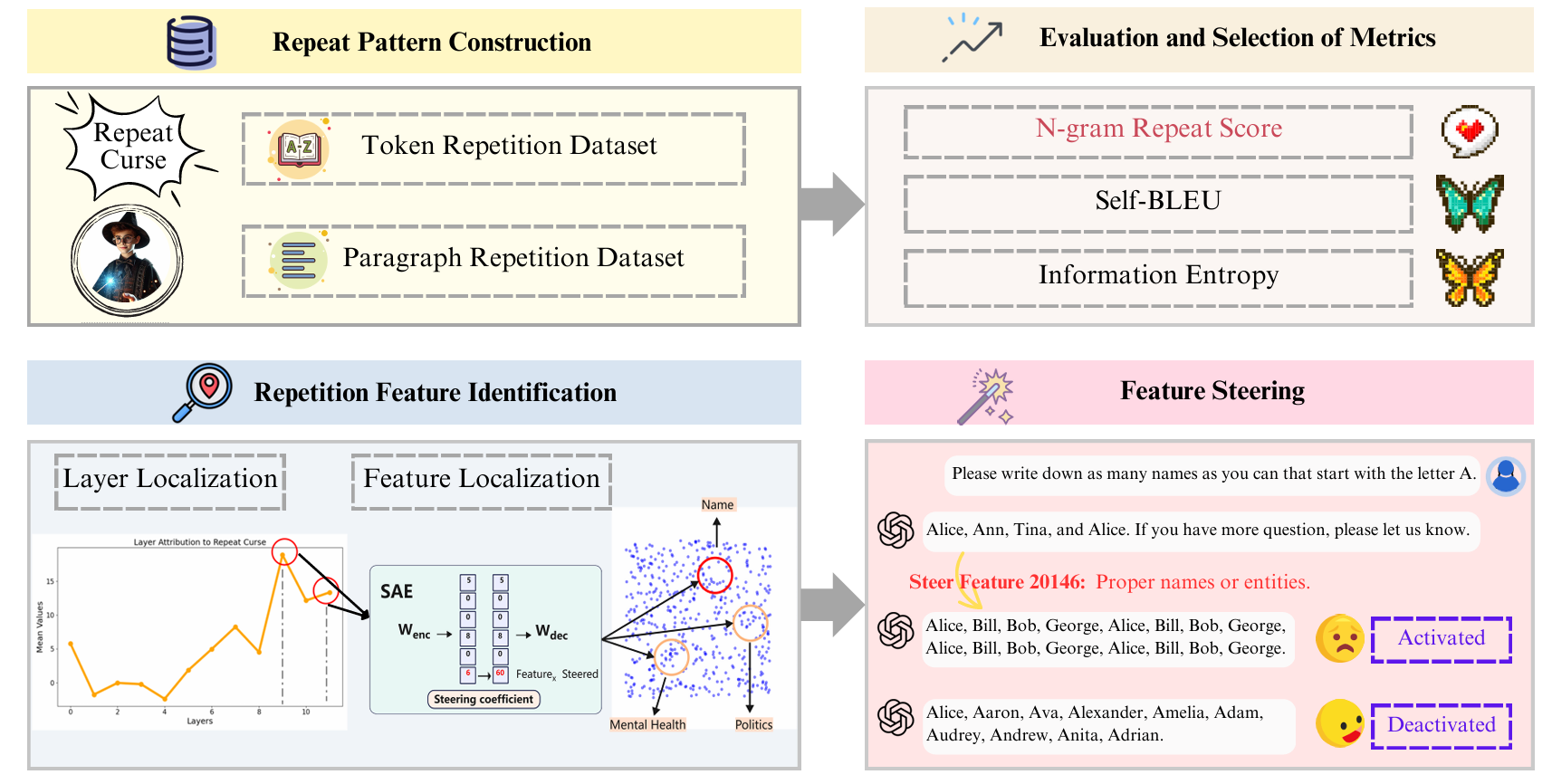

ACL 2025 Findings

ACL 2025 Findings

Nature SR

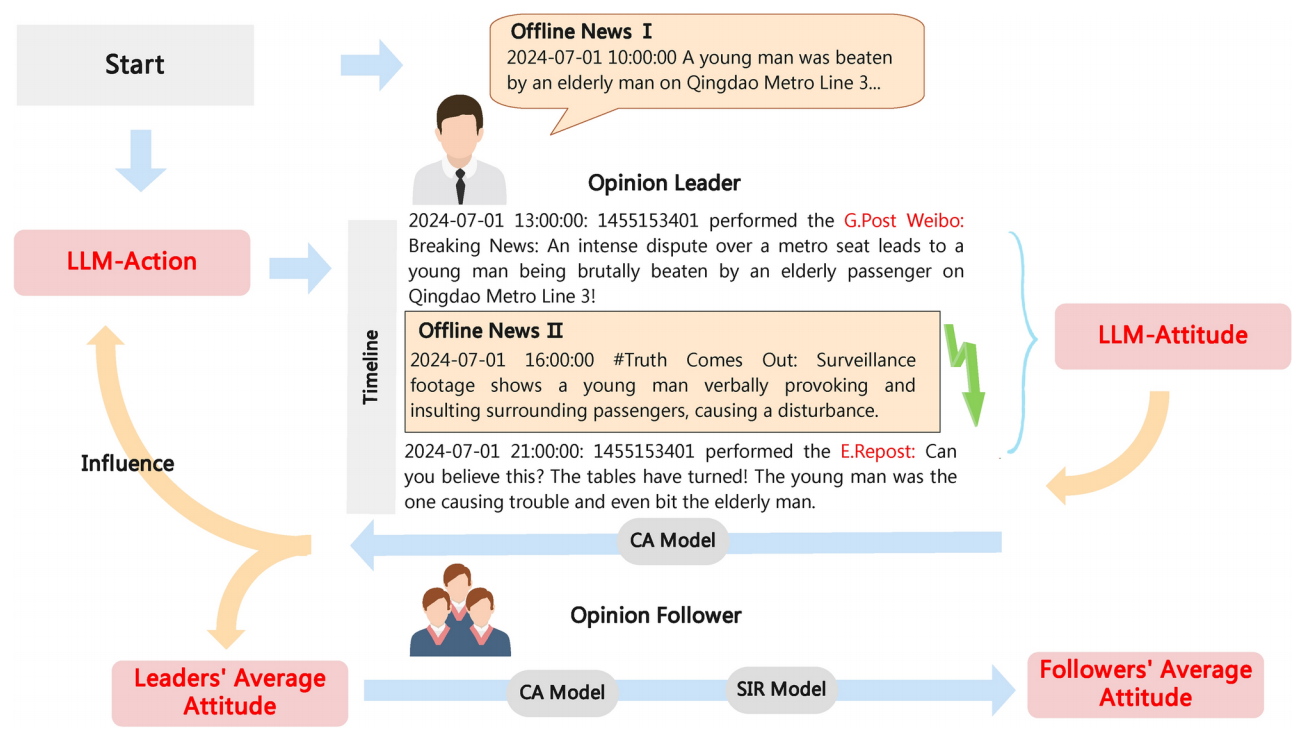

Social opinions prediction utilizes fusing dynamics equation with LLM-based agents

Nature Scientific Reports

Education

- 2022.09 - 2026.06: Bachelor of Engineering in Information System and Information Management, University of Electronic Science and Technology of China.

- 2023.11 - 2024.04: Young Future Energy Leader Programme Student, Khalifa University.

Internships

- 2025.03 - Present: Research Intern, AI4Science, Shanghai AI Lab.

- 2024.07 - 2025.03: LLM Agent Engineer, Product Design and R&D Department, GoAfarAI (Startup).

- 2024.06 - 2025.06: Research Intern, Provable Responsible AI and Data Analytics Lab, KAUST.

- 2024.04 - 2025.02: Research Intern, School of Computer Science, Sichuan Normal University.